A Brief Overview of the History of Conscious Spaces

This article briefly reviews some of humanity's computational developments, touches on neuromimetic architectural implementations, and finally ties it all back to cutting-edge opportunities for AI and the built environment.

More than two thousand years ago humanity developed what some argue is an analog computer, the Antikythera mechanism. It is a clear example of human ingenuity, facility, and mathematical precision. More importantly, the device sets humanity on a trajectory of computation and algorithmically informed decision making, one that eventually leads to the artificial neural networks at the center of today's innovations in computation. It is believed that a device such as this would have been commissioned and sought after by the kings and emperors of the time, as it would have provided information available only to the elite. Likewise, the automata of Pierre Jaquet-Droz centuries later share the same pedigree and held a similar allure.

In 1843 Ada Lovelace articulated the algorithmic process that may be described as the bedrock upon which modern computing has been built. Inspired by Charles Babbage's development of the Analytical Engine, she elaborated on the possible futures that such a machine implied, in essence developing a language upon which such a machine could operate.

Innovation is often the product of competition, and never more so than in war. World War II was no different. The warring factions sought to encrypt, intercept and decipher the messages sent in the war effort, and from that effort the modern age of computation was born. Alan Turing and his team developed a machine designed to decipher encrypted enemy messages, taking the prior developments to a new place. These developments begin to chart out a vein of logic that has helped shape the trajectory of civilization as we know it. Later, in 1950, Turing proposed what is now known as the Turing Test, which has become important in artificial intelligence research and theoretical computation.

In the late 1970s, Cedric Price was commissioned to design a new space for the Gilman Paper Corporation, named the Generator Project. It is arguably the seminal architectural project dealing with what Michael Arbib calls "neuromorphic architecture", and it remains ambitious even when evaluated today. The objective was to create a space in direct dialog with its patrons, one that responds to the actions and queries of its users and its programmer and provokes responses in turn.

Price worked with John and Julia Frazer to develop the computational models upon which the project is based.

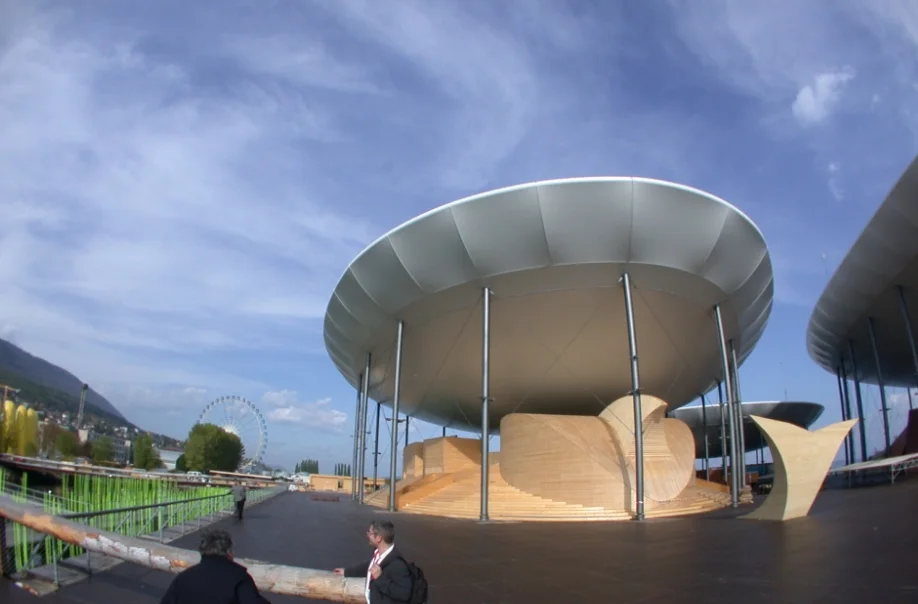

Ada Building - Zurich.

Years later, a much less ambitious yet actualized project memorializing Ada Lovelace was conceived and executed for the Swiss national exhibition of 2002. The project set out to accomplish what Cedric Price had aimed to do years earlier; the differences were one of computational capacity and a much smaller scope. The ambition was to create a responsive dance floor that encouraged a dialog with its users through interactive lit floor tiles, other lighting effects, and music. If the dance floor became too crowded it would run one routine; it could run another to calm the mood and yet another to increase the tempo of the group.

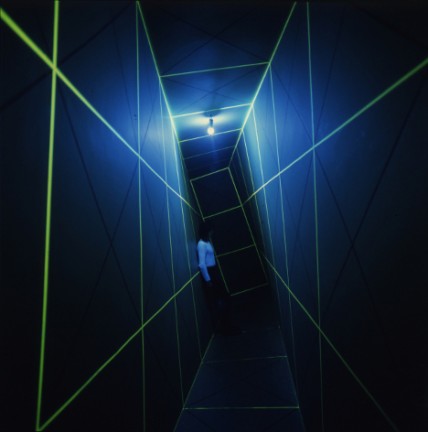

Ada Building: inside. Credit: www.architettura.it

Giulio Tononi has developed Integrated Information Theory, an attempt to quantify consciousness. He defines consciousness as "integrated information", where an experience consists of informational relationships that have an n-dimensional structure or volume. In this view consciousness is a measurable quantity similar to "mass, charge, or energy." Integrated Information Theory has deep implications. It “quantifies the connectivity and intelligence of a particular neural network, and the degree to which it is self-aware”. This means that consciousness is quantifiable as a measure called Phi, which happens to be the name of Tononi’s book; he has also worked with the neuroscientist Christof Koch to develop, test and illustrate the theory. Essentially, in their view consciousness is a spectrum along which sufficiently sophisticated and self-aware systems have some degree of consciousness. This implies that even complex synthetic or simulated neural network systems have levels of consciousness. Following this logic, one could argue that even a system that is not self-aware contains some aspects of integrated information, or consciousness. Understanding or creating consciousness has been a pursuit throughout the ages.

The ability of a system to be self-aware seems to be the aspect with which humanity has struggled. I believe several recent developments have the ability to alter our relationship to such limitations: the exponential increase in sensor sophistication and ubiquity, the advances in machine learning and AI, the innovation of quantum computing, and the global interconnected database that is the internet (including the increasing physicality of computing through mobile and internet-of-things developments, such as blockchain innovations).

One compelling body of work addressing a move toward self-aware proto-biological spaces is that of Philip Beesley. His work is becoming increasingly sophisticated, incorporating advances in materials and manufacturing processes, near-living chemical systems, and responsiveness through interconnectivity and sensor data.

Aurora, Philip Beesley installation in Alberta. Credit: www.philipbeesley.com

Using recent developments in machine learning as part of the design process opens extensive opportunities for prototype versioning, letting the designer realistically tease out and analyze innumerable possible configurations and allowing comprehensive real-time analysis of a greater set of parametric examples. The research team believes that this technique will aid in the discovery of unrealized configurations, potentially with unforeseen benefits or applications. Additionally, incorporating sensors into the assembly allows for an automatically actuated or responsive system. Eventually, this may lead to analytical feedback into the machine learning parameters applied to future configurations, and to optimal techniques for interaction.

There is an opportunity to use the recently released OpenAI Gym project, an "open-source library" used to develop and test reinforcement learning (RL) algorithms, or agents. Some of its environments lend themselves to the development of dynamic structures. In essence, it allows the RL agent to explore configurations without preconception. This method is similar to current computational geometry tools in that it allows the designer to evaluate more iterations, but it takes the logic one step further: at each step the environment returns four values that inform the iterative learning process, namely observation, reward, info, and done (the last used to reset the environment for another iterative experiment). This logic is based on a tried and tested "agent-environment loop. Each timestep, the agent chooses an action, and the environment returns an observation and a reward." The RL agent starts with random actions and then, through the feedback provided by the iterative learning process, is encouraged to make increasingly informed decisions over time. Current experiments have not been directed toward spatial or architectural problems, but the preliminary exercises show promise for their application toward dynamic structural systems.
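
The agent-environment loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the research team's setup: `ToyStrutEnv` is a hypothetical stand-in written for this article (it is not one of the OpenAI Gym environments), modelling a single strut whose angle the agent nudges toward a target. It mimics the classic Gym-style interface, in which `reset()` returns an observation and `step(action)` returns observation, reward, done, and info. The agent here acts purely at random, which is where an RL agent begins before any learning takes place.

```python
import random


class ToyStrutEnv:
    """Hypothetical Gym-style environment (an assumption for illustration only).

    The state is a discrete strut angle; the agent moves it toward a target.
    """

    ACTIONS = (-1, 0, 1)  # decrease, hold, or increase the angle

    def __init__(self, target=7, size=11, max_steps=50, seed=0):
        self.target = target
        self.size = size
        self.max_steps = max_steps
        self.rng = random.Random(seed)
        self.angle = 0
        self.steps = 0

    def reset(self):
        """Start a new episode at a random angle and return the observation."""
        self.angle = self.rng.randrange(self.size)
        self.steps = 0
        return self.angle

    def step(self, action_index):
        """Apply one action and return (observation, reward, done, info)."""
        delta = self.ACTIONS[action_index]
        self.angle = min(self.size - 1, max(0, self.angle + delta))
        self.steps += 1
        reward = -abs(self.angle - self.target)  # closer to target is better
        done = self.angle == self.target or self.steps >= self.max_steps
        info = {"steps": self.steps}
        return self.angle, reward, done, info


def run_episode(env, policy):
    """Run one pass of the agent-environment loop until `done` is True."""
    observation = env.reset()
    total_reward = 0
    done = False
    while not done:
        action = policy(observation)  # the agent chooses an action
        observation, reward, done, info = env.step(action)
        total_reward += reward
    return total_reward, info["steps"]


# A purely random agent: the starting point before any learning occurs.
agent_rng = random.Random(1)
env = ToyStrutEnv()
total, steps = run_episode(env, lambda obs: agent_rng.randrange(len(env.ACTIONS)))
print("random agent: total reward", total, "after", steps, "steps")
```

A learning agent would replace the random policy with one that updates itself from the reward signal after each step, so that later episodes reach the target in fewer steps. For a dynamic structure, the observation might instead be a set of sensor readings and the action an actuator command, though that mapping is a design choice this sketch does not settle.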